Why Most AI Initiatives Fail in Manufacturing and How to Build Ones That Scale

What’s in This Blog?

- Why Most AI Initiatives Fail in Manufacturing

- 7 Reasons Most AI Initiatives in Manufacturing Fail

- How to Build AI Initiatives That Scale in Manufacturing

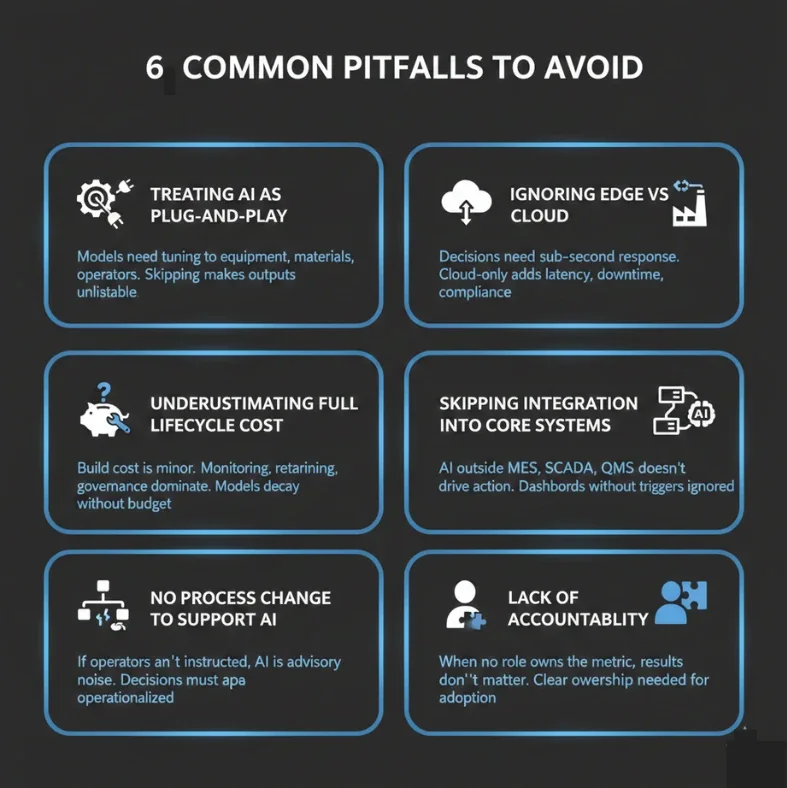

- Common Pitfalls and How to Avoid Them

- Frequently Asked Questions

Most manufacturing companies are spending millions on AI. Most of those investments won’t make it past the pilot phase.

The technology works. The problem is sequencing. Companies build models before they build the infrastructure to run them.

Successful implementations start differently. Data infrastructure comes before model development. Design accounts for operational constraints. Systems are built to scale across use cases, not optimized for a single pilot.

This post breaks down why that happens. We’ll walk through the most common failure modes in manufacturing AI, how to spot them early, and the practical building blocks behind initiatives that actually scale.

Let’s dive in.

7 Reasons Most AI Initiatives in Manufacturing Fail

Manufacturing leaders expect AI to deliver major gains in productivity, predictive maintenance, and quality control. In reality, most initiatives fail to scale, and success rates below 30% are commonly reported. Understanding the root causes helps you avoid these traps and build projects that deliver real results.

No Clear Business Problem

Many teams start with vague goals like “use AI” instead of targeting a specific issue that affects key metrics (e.g., reducing unplanned downtime by 20% or improving first-pass yield). Without a sharp problem statement, projects lose direction and executive backing quickly.

Bad or Inaccessible Data

Your data is often scattered across old systems, inconsistent, or full of gaps from sensor failures. Models built on this foundation give unreliable outputs, and operators stop trusting the system almost immediately.

Missing Input from Shop-Floor Experts

Data scientists working alone create models that look good on paper but ignore real production realities. Without close collaboration with process engineers and operators, solutions become unusable in daily operations.

Poor User Adoption on the Floor

Even accurate AI tools gather dust if workers do not trust or understand them. Operators resist when the system adds complexity, provides no obvious benefit, or raises fears about job security.

Stuck in Pilot Mode

A proof-of-concept may work perfectly on one line, but scaling across multiple plants fails when data formats differ, volumes increase, or hardcoded rules break in new conditions.

Weak Infrastructure and Integration

Legacy equipment, limited edge computing, and strict security policies block real-time deployment. Models that perform well in the cloud often suffer from latency or connectivity issues on the actual production line.

Lack of Long-Term Commitment

Leaders treat AI as a short-term experiment rather than a core capability. When early results fall short of hype or budgets shift, support vanishes before models can improve through ongoing retraining and refinement.

Recognizing these issues early lets you design AI projects with better odds of success. The next sections provide practical steps to address each one and create initiatives that scale reliably across your operations.

How to Build AI Initiatives That Scale in Manufacturing

You already know most AI projects in manufacturing fizzle out after the pilot. The difference between those and the ones that deliver millions in sustained value comes down to disciplined execution across the entire lifecycle. Successful teams treat AI as a production system, not a science experiment.

Here is a practical framework for moving from one successful pilot to enterprise-wide deployment.

1. Start with a Measurable Business Win

Pick problems where AI can move a needle you already track. Frame every initiative around the smallest outcome that proves value fast.

Score opportunities by multiplying impact (dollars or percentage improvement) by feasibility (data readiness and team access). Focus on 2-3 use cases per year that can show results in 3-6 months.
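The impact-times-feasibility scoring above is simple enough to keep in a shared script. The sketch below assumes impact is an estimated annual value (in $k) and feasibility is a 0-1 rating; the use cases and numbers are hypothetical placeholders, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    impact: float       # estimated annual value in $k (hypothetical figures)
    feasibility: float  # 0.0-1.0 rating of data readiness and team access

    @property
    def score(self) -> float:
        # Score = impact x feasibility, as described in the text
        return self.impact * self.feasibility


def top_use_cases(candidates, k=3):
    """Return the k highest-scoring use cases, best first."""
    return sorted(candidates, key=lambda uc: uc.score, reverse=True)[:k]


candidates = [
    UseCase("Predictive maintenance, press line", impact=400, feasibility=0.7),
    UseCase("Visual defect inspection", impact=600, feasibility=0.3),
    UseCase("Spare-parts forecasting", impact=250, feasibility=0.9),
    UseCase("Energy optimization", impact=150, feasibility=0.5),
]

for uc in top_use_cases(candidates):
    print(f"{uc.name}: {uc.score:.0f}")
```

Note how the highest-impact idea (visual inspection) drops to third once its weak data readiness is factored in.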

Target metrics you own today: reduce MTTR by 15%, lift first-pass yield by 3%, or cut spare-parts inventory while maintaining service levels. Define baseline, target, and how you will measure it weekly.
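One way to report weekly against a baseline and target is "share of the gap closed." This minimal sketch works for metrics you want to lower (MTTR) and raise (first-pass yield) alike, because the sign of the target-minus-baseline difference encodes the direction. The weekly readings are hypothetical.

```python
def progress_to_target(baseline: float, target: float, current: float) -> float:
    """Fraction of the baseline-to-target gap closed so far.

    0.0 means no change from baseline, 1.0 means the target is reached,
    negative values mean the metric moved the wrong way.
    """
    if target == baseline:
        raise ValueError("target must differ from baseline")
    return (current - baseline) / (target - baseline)


# Illustrative numbers: MTTR baseline of 120 minutes, goal is a 15% reduction
mttr_baseline = 120.0
mttr_target = mttr_baseline * (1 - 0.15)   # about 102 minutes
weekly_mttr = [118.0, 112.0, 109.0, 104.0]  # hypothetical weekly readings

for week, value in enumerate(weekly_mttr, start=1):
    pct = progress_to_target(mttr_baseline, mttr_target, value)
    print(f"Week {week}: MTTR {value:.0f} min, {pct:.0%} of target gap closed")
```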

2. Lock Down Your Data Foundation First

Nothing kills momentum faster than garbage data. Before any model work starts, build reliable ingestion and quality gates.

Connect OT sources (historians, PLCs, SCADA) to IT systems using proven patterns: edge gateways for real-time data, OPC UA or MQTT for streaming, and unified namespaces for context.
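A unified namespace usually gives every data point a predictable MQTT topic path that mirrors the plant hierarchy. The sketch below assumes an ISA-95-style enterprise/site/area/line/cell/tag layout; those level names are a common convention, not something this post prescribes, and the plant names are hypothetical. It rejects the characters MQTT reserves for the level separator (`/`) and subscription wildcards (`+`, `#`).

```python
def uns_topic(enterprise: str, site: str, area: str, line: str,
              cell: str, tag: str) -> str:
    """Build a UNS-style MQTT topic from an ISA-95-like hierarchy."""
    levels = [enterprise, site, area, line, cell, tag]
    # Normalize so every plant produces identical paths for identical assets
    normalized = [lvl.strip().lower().replace(" ", "_") for lvl in levels]
    for lvl in normalized:
        # '/' separates topic levels; '+' and '#' are wildcards that are
        # not allowed in topic names a publisher writes to
        if not lvl or any(ch in lvl for ch in "/+#"):
            raise ValueError(f"invalid topic level: {lvl!r}")
    return "/".join(normalized)


print(uns_topic("Acme", "Plant A", "Stamping", "Line 3", "Press 2", "spindle_temp"))
```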

Agree on a data contract upfront: exact fields needed, maximum latency, sampling requirements, and quality thresholds (e.g., 95% completeness, defined outlier bounds). Automate validation so bad data never reaches the model.
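A data contract becomes enforceable once it is written as code that runs before every model call. This is a minimal sketch under stated assumptions: readings arrive as dicts in batches, each reading carries a `latency_s` value from the ingestion layer (a hypothetical field name), and the field names, limits, and bounds are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class DataContract:
    required_fields: tuple        # fields every reading must carry
    max_latency_s: float          # max allowed delay from sensor to pipeline, seconds
    min_completeness: float       # e.g. 0.95 -> at least 95% of expected readings usable
    bounds: dict = field(default_factory=dict)  # field -> (low, high) outlier bounds


def is_complete(row, contract):
    """A row counts as complete only if every required field is present and non-null."""
    return all(row.get(f) is not None for f in contract.required_fields)


def validate_batch(rows, expected_rows, contract):
    """Return a list of contract violations; an empty list means the batch may reach the model."""
    violations = []
    complete_count = sum(is_complete(r, contract) for r in rows)
    completeness = complete_count / expected_rows if expected_rows else 0.0
    if completeness < contract.min_completeness:
        violations.append(
            f"completeness {completeness:.1%} below {contract.min_completeness:.0%}")
    for i, row in enumerate(rows):
        if not is_complete(row, contract):
            continue  # already counted against completeness
        if row["latency_s"] > contract.max_latency_s:
            violations.append(f"row {i}: latency {row['latency_s']} s exceeds limit")
        for name, (low, high) in contract.bounds.items():
            value = row.get(name)
            if value is not None and not low <= value <= high:
                violations.append(f"row {i}: {name}={value} outside [{low}, {high}]")
    return violations


contract = DataContract(
    required_fields=("timestamp", "spindle_temp_c", "latency_s"),
    max_latency_s=2.0,
    min_completeness=0.95,
    bounds={"spindle_temp_c": (-20.0, 150.0)},
)
batch = [
    {"timestamp": 1, "spindle_temp_c": 71.2, "latency_s": 0.4},
    {"timestamp": 2, "spindle_temp_c": None, "latency_s": 0.5},   # sensor gap
    {"timestamp": 3, "spindle_temp_c": 480.0, "latency_s": 0.3},  # outlier
]
problems = validate_batch(batch, expected_rows=3, contract=contract)
if problems:
    # Quarantine the batch instead of forwarding it to the model
    print("Batch rejected:", *problems, sep="\n  ")
```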

3. Assign Clear Cross-Functional Ownership

AI in plants only works when everyone knows their role. Use a simple RACI.

Plant operations owns the business outcome and frontline adoption. Data engineers own pipelines and quality. The AI team owns model performance and iteration.
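Keeping the RACI as a small, versioned data structure makes it easy to check the usual rule of exactly one Accountable owner per activity. In this illustrative sketch the "A" assignments follow the ownership described above; the R/C/I entries are example choices to adapt, not a recommendation.

```python
# Codes: A = Accountable, R = Responsible, C = Consulted, I = Informed
RACI = {
    "Business outcome & frontline adoption": {
        "Plant operations": "AR", "Data engineering": "I", "AI team": "C"},
    "Data pipelines & quality": {
        "Plant operations": "C", "Data engineering": "AR", "AI team": "C"},
    "Model performance & iteration": {
        "Plant operations": "C", "Data engineering": "C", "AI team": "AR"},
}


def raci_problems(matrix):
    """Flag activities that do not have exactly one Accountable owner."""
    problems = []
    for activity, roles in matrix.items():
        accountable = [role for role, code in roles.items() if "A" in code]
        if len(accountable) != 1:
            problems.append(f"{activity}: {len(accountable)} accountable owners")
    return problems


print(raci_problems(RACI) or "RACI OK")
```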

Put a lightweight governance process in place early: classify risk level, secure data access, keep audit trails, and review ethical impact on operators.

4. Treat Models Like Production Software

Deploy with proper MLOps from day one. Set up CI/CD for training, testing, and deployment.

Monitor everything that can break: data drift, prediction accuracy, inference latency. Trigger alerts and automatic retraining when thresholds are crossed.
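Drift monitoring can start simpler than a full platform. A minimal sketch, assuming one numeric feature and the Population Stability Index (PSI) as the drift score. The 0.25 alert threshold is a widely used rule of thumb rather than a value this post prescribes, and the retraining step is a placeholder print where your pipeline trigger would go.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one feature.

    Bins are equal-width over the reference range; values outside that
    range are clipped into the first/last bin. eps avoids log(0) for empty bins.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(reference), proportions(current)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


PSI_ALERT = 0.25  # common rule-of-thumb threshold; tune per feature


def check_drift(feature_name, reference, current):
    score = psi(reference, current)
    if score > PSI_ALERT:
        # Placeholder: wire this to your alerting and retraining pipeline
        print(f"ALERT {feature_name}: PSI={score:.3f}, queueing retraining job")
        return True
    return False


reference = [i / 100 for i in range(1000)]         # training-time distribution
shifted = [5 + i / 200 for i in range(1000)]       # production data that moved
check_drift("spindle_temp", reference, shifted)
```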

Plan rollback procedures and version control. Tools like MLflow, Prometheus, and specialized platforms such as Superwise make this manageable without massive overhead.
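The core of a rollback procedure is keeping every model version and a history of what served production. The toy in-memory class below is hypothetical and illustrates the idea only; it is not MLflow's API, and a real registry would also persist artifacts and metadata.

```python
class ModelRegistry:
    """Toy in-memory registry: every version is kept, and production
    history is tracked so a bad release can be reverted in one step."""

    def __init__(self):
        self.versions = {}             # version number -> model artifact
        self.production_history = []   # promoted versions, oldest first

    def register(self, model) -> int:
        version = len(self.versions) + 1
        self.versions[version] = model
        return version

    def promote(self, version: int) -> None:
        if version not in self.versions:
            raise KeyError(f"unknown version {version}")
        self.production_history.append(version)

    @property
    def production(self):
        return self.production_history[-1] if self.production_history else None

    def rollback(self) -> int:
        """Revert production to the previously promoted version."""
        if len(self.production_history) < 2:
            raise RuntimeError("no earlier production version to roll back to")
        self.production_history.pop()
        return self.production_history[-1]


registry = ModelRegistry()
v1 = registry.register("bearing-model-v1-artifact")  # placeholder artifacts
v2 = registry.register("bearing-model-v2-artifact")
registry.promote(v1)
registry.promote(v2)
# v2 misbehaves on the line (e.g. a latency spike): revert to v1
registry.rollback()
print(registry.production)
```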

5. Design for the Operator, Not the Data Scientist

The best model is useless if operators ignore it. Embed outputs directly into existing HMI, SCADA, or mobile tools they already use.

Show clear, actionable insights: what is happening, why it matters, and what to do next. Include simple explanations (top contributing factors) so operators can build trust.
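The what / why / what-next pattern can be produced from any model that reports per-factor contributions. This sketch assumes the contributions are computed upstream (for example by an attribution method such as SHAP); the asset, factor names, and action text are hypothetical.

```python
def operator_message(asset, risk, contributions, action, top_n=3):
    """Format a prediction as what / why / what-to-do text for an HMI panel.

    `contributions` maps a readable factor name to its signed contribution
    to the risk score; only the largest absolute contributors are shown.
    """
    top = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_n]
    reasons = ", ".join(
        f"{name} ({'raises' if value > 0 else 'lowers'} risk)" for name, value in top)
    return (f"{asset}: failure risk {risk:.0%}\n"
            f"Why: {reasons}\n"
            f"Next step: {action}")


msg = operator_message(
    asset="Press 2 main bearing",
    risk=0.82,
    contributions={"vibration RMS rising": 0.31, "bearing temp high": 0.22,
                   "lubrication overdue": 0.12, "ambient temp": -0.02},
    action="Schedule bearing inspection at next changeover",
)
print(msg)
```

Limiting the explanation to the top few factors keeps the message readable at a glance on a busy line.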

Follow human-factors principles: avoid alert fatigue, provide context, and support decisions rather than replace them.
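One simple guard against alert fatigue is to suppress repeats of the same alert on the same asset within a cooldown window. The 15-minute window and the (asset, alert type) key in this sketch are illustrative choices, not a standard; the injectable clock only exists to make the behavior easy to test.

```python
import time


class AlertSuppressor:
    """Suppress repeats of the same alert on the same asset within a cooldown window."""

    def __init__(self, cooldown_s=900.0, clock=time.monotonic):
        self.cooldown_s = cooldown_s
        self.clock = clock
        self._last_sent = {}  # (asset, alert_type) -> time the alert was last shown

    def should_send(self, asset, alert_type):
        key = (asset, alert_type)
        now = self.clock()
        last = self._last_sent.get(key)
        if last is not None and now - last < self.cooldown_s:
            return False  # same alert shown recently: stay quiet
        self._last_sent[key] = now
        return True


fake_now = [0.0]
suppressor = AlertSuppressor(cooldown_s=900, clock=lambda: fake_now[0])
print(suppressor.should_send("press_2", "bearing_risk"))  # first occurrence
fake_now[0] = 60.0
print(suppressor.should_send("press_2", "bearing_risk"))  # inside the window
fake_now[0] = 1000.0
print(suppressor.should_send("press_2", "bearing_risk"))  # window has elapsed
```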

6. Choose the Right Buy-vs-Build Path

For standard problems like predictive maintenance on common equipment, packaged solutions from established vendors often win on speed and support. Go custom only when the process is truly proprietary and differentiates your business.

When evaluating vendors, demand straight answers on inference latency SLAs, data ownership, update cadence, OT integration, and your right to audit performance.

7. Measure Both Business and Technical Health

Track two separate dashboards.

- Business dashboard (monthly to leadership): actual impact on MTTR, yield, OEE, scrap, or inventory dollars.

- Technical dashboard (weekly to the team): model precision, latency, drift scores, pipeline uptime.
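OEE on the business dashboard uses the standard definition: availability x performance x quality. A minimal sketch for one period; the shift figures are illustrative.

```python
def oee(planned_min, downtime_min, ideal_cycle_min, total_count, good_count):
    """Overall Equipment Effectiveness and its three factors for one period."""
    run_min = planned_min - downtime_min
    availability = run_min / planned_min                   # share of planned time actually running
    performance = ideal_cycle_min * total_count / run_min  # actual output vs ideal-speed output
    quality = good_count / total_count                     # good parts as a share of all parts
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


# Illustrative shift: 480 planned minutes, 48 minutes down,
# 0.5 min ideal cycle time, 800 parts made, 776 good
shift = oee(480, 48, 0.5, 800, 776)
print({k: f"{v:.1%}" for k, v in shift.items()})
```

A useful sanity check: the product simplifies to good parts x ideal cycle time / planned time, so the three factors must multiply back to that figure.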

Review both together quarterly and adjust priorities based on what is actually moving the needle.

8. Build a Repeatable Scaling Playbook

Scaling fails when every new plant reinvents the wheel. Create reusable assets from your first success.

Standardize data schemas, pipeline code, feature definitions, model templates, and operator guides. Run a structured “scale sprint” for each new site: verify data consistency, adapt for local differences, retrain on combined data, pilot on one line, then roll out.
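The "verify data consistency" step of a scale sprint can start as a diff between a new site's fields and the standard schema. This sketch assumes schemas are kept as field-to-unit maps; the field names, units, and the Plant B example are hypothetical.

```python
# Hypothetical standard schema shared across sites: field name -> unit
STANDARD_SCHEMA = {
    "timestamp": "utc_iso8601",
    "spindle_temp": "degC",
    "vibration_rms": "mm/s",
    "part_count": "count",
}


def schema_gaps(site_schema, standard=STANDARD_SCHEMA):
    """Compare a site's field->unit map with the standard schema.

    Returns (missing, unit_mismatches, extras) so mappings can be planned
    before any retraining on combined data.
    """
    missing = sorted(set(standard) - set(site_schema))
    extras = sorted(set(site_schema) - set(standard))
    mismatches = sorted(
        f"{name}: {site_schema[name]} != {unit}"
        for name, unit in standard.items()
        if name in site_schema and site_schema[name] != unit
    )
    return missing, mismatches, extras


plant_b = {"timestamp": "utc_iso8601", "spindle_temp": "degF",
           "vibration_rms": "mm/s", "line_speed": "m/min"}
missing, mismatches, extras = schema_gaps(plant_b)
print("Missing:", missing)              # fields to source or derive locally
print("Unit mismatches:", mismatches)   # conversions needed before training
print("Site-only fields:", extras)      # candidates for the standard, or ignore
```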

A small Center of Excellence (CoE) keeps templates current and accelerates knowledge transfer. Follow this sequence, and you shift AI from a high-risk experiment to a predictable capability that compounds value year after year. Start your next initiative by nailing components 1-3; everything else flows from there.

Common Pitfalls and How to Avoid Them

These are not beginner mistakes. These are the exact traps that stall AI programs after the first deployment.

Wrapping Up

Most AI initiatives in manufacturing fail for one reason: they are treated as technology projects instead of operational systems. Scalable AI starts with a clear production problem, reliable data, and defined ownership on the shop floor.

It requires deployment-ready architecture, lifecycle management, and integration into daily workflows. When AI decisions are measurable, trusted by operators, and maintained over time, they deliver real impact.

Most manufacturing AI initiatives fail for the same few reasons.

The Commerce Shop identifies where your initiative breaks and designs a path to deployment, adoption, and scale.

Frequently Asked Questions

Why do most AI pilots in manufacturing never scale?

Because pilots are built to prove models, not to integrate into MES, workflows, and operator routines. Without deployment planning, pilots stall.

Do manufacturers need perfect data before starting AI?

No. But data must be consistent, contextual, and usable for decisions. Poorly structured data leads to unreliable outputs.

Should AI be deployed at the edge or in the cloud?

It depends on latency and reliability needs. Real-time control and inspection often require edge deployment, while optimization can run in the cloud.

Who should own AI initiatives in manufacturing?

Operations must own the outcome. IT and data teams support execution, but plant leadership drives adoption and results.
