AI Without Disruption: A Practical Playbook for Manufacturing Leaders

What’s in This Blog?

- Why AI Fails on the Manufacturing Floor

- Why Manufacturing Still Runs on Manual Workarounds

- The Cost of Manual Gaps in Operations

- Check This Before Rolling Out AI in Operations

- Mapping Manual Gaps to AI Capabilities

- A Step-by-Step Roadmap to Deploy AI Without Disruption

- Common AI Failure Points Manufacturing Leaders Should Watch

- How to Measure AI Success in Manufacturing

- Wrap Up

Over 70% of AI initiatives in manufacturing never move beyond pilots. Not because the models fail, but because production teams won’t tolerate disruption.

Manufacturing runs on tight margins and near-zero tolerance for downtime. When AI adds steps, slows decisions, or changes workflows before proving value, it gets rejected. Most failures follow a similar pattern: tools are chosen before gaps are identified, dashboards are built before the decisions they should support are defined, and pilots are launched before operator trust is established.

There’s a more practical approach. Start with the manual gaps leaders already manage around. Apply AI only where it fits existing workflows. Scale only after it proves it won’t disrupt the floor.

This post breaks down those gaps, identifies what AI can realistically replace, and provides a step-by-step roadmap for deploying AI without disruption.


Why Manufacturing Still Runs on Manual Workarounds

Manufacturing stayed manual not because leaders resisted change but because stability mattered more than experimentation. When uptime, safety, and delivery are non-negotiable, predictability wins, even if it creates inefficiencies.

Most plants still accept the same gaps.

- Shift handovers depend on people, not systems, so critical context disappears between shifts.

- Maintenance decisions rely on experience instead of real-time signals, leading to reactive fixes.

- Quality checks happen after defects occur, making scrap and rework feel unavoidable.

- Production planning stays reactive, adjusting only after delays surface.

- Data lives in silos across OT, MES, and ERP, preventing a shared operational view.

These gaps persist because automation has historically felt risky. IT and operations rarely move together. Past digital projects disrupted production without improving execution.

The Cost of These Manual Gaps (Where Leaders Lose Money)

Manual gaps don’t appear as single failures. They create repeatable cost patterns across operations.

Unplanned Downtime

When shift handovers and maintenance rely on people instead of systems, early warning signals are missed. Issues surface during production, not before, turning minor deviations into downtime events.

Scrap and Rework

When quality checks happen after production, defects move downstream. Material, labor, and machine time are consumed before problems are visible, locking in losses.

Missed Delivery Dates

Reactive planning means schedules change after constraints appear. Teams spend time expediting instead of stabilizing flow, hurting customer commitments.

Excess Inventory

Inventory grows as a hedge against uncertainty. Stock replaces visibility, tying up working capital without reducing variability.

Operator Fatigue and Decision Overload

Operators compensate for system gaps by making judgment calls with incomplete data. Fatigue increases, consistency drops, and errors follow.

The disruption is already happening. Applied well, AI doesn’t add risk; it exposes where cost and control are already leaking.

Check This Before Rolling Out AI in Operations

Use this readiness checklist before introducing AI. It is how you avoid disruption.

AI works only when it’s applied to processes that already behave predictably. Before introducing it on the floor, pressure-test your readiness with these questions.

Is this process already under control?

If performance swings day to day, AI won’t stabilize it. It will mirror the instability.

Who is accountable for outcomes, not tools?

If ownership is shared or unclear, issues will linger. AI needs a single operational owner.

Are signals already being captured today?

If AI depends on new data entry or manual work, adoption will break. It should consume what the process already generates.

Can this run alongside production without interrupting it?

If a failure can stop the line, the system isn’t ready for live operations.

Can success be measured in operational terms?

If you can’t tie it to downtime, scrap, throughput, or labor efficiency, don’t deploy it.

If most answers aren’t yes, pause. Fix the process first. AI should reduce risk, not introduce it.
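The first question above, whether the process is already under control, can be checked with basic statistics before any model is involved. The sketch below is one hedged way to do that in Python: it builds control-chart style limits (mean plus or minus three standard deviations) from a baseline period and lists the recent days that fall outside them. The function name, the daily scrap-rate figures, and the three-sigma rule are illustrative assumptions, not a standard this post prescribes.

```python
from statistics import mean, stdev

def out_of_control_days(baseline, recent, sigmas=3.0):
    """Return the recent values outside baseline mean +/- sigmas * stdev."""
    center = mean(baseline)
    spread = stdev(baseline)
    lower, upper = center - sigmas * spread, center + sigmas * spread
    return [value for value in recent if value < lower or value > upper]

# Hypothetical daily scrap rates (%) for one line.
baseline_scrap = [2.1, 2.3, 1.9, 2.0, 2.2, 2.1, 2.0, 2.2]
recent_scrap = [2.1, 2.4, 3.6, 2.0, 1.1]

flagged = out_of_control_days(baseline_scrap, recent_scrap)
print(flagged)  # values outside the baseline limits
```

If several recent days are flagged, the advice above applies: stabilize the process first, then revisit AI.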

Mapping Manual Gaps to AI Capabilities

This mapping shows where AI creates leverage without forcing teams to relearn how the plant operates. The process remains primary. AI fills specific gaps quietly.

Manual Inspections → AI Vision Systems

Visual inspections stay at the same points on the line. AI models analyze camera feeds continuously and surface anomalies earlier than human-only checks. Operators retain decision authority. No workflow change is required.

Reactive Maintenance → Predictive Maintenance Models

Maintenance teams continue following existing schedules and work orders. Predictive models analyze machine signals and historical failures to flag risk earlier, helping teams plan interventions instead of reacting to breakdowns.

Spreadsheet Planning → AI-Assisted Scheduling

Planners keep their tools and ownership. AI evaluates constraints, sequences, and scenarios in the background, recommending better plans without auto-executing decisions.

End-of-Line Quality Checks → In-Line Defect Detection

Quality processes remain intact. AI shifts detection upstream so issues are identified closer to the source, reducing scrap and rework without changing approval or release steps.

Across all cases, the rule is consistent: AI augments decisions, fits into existing workflows, and avoids forcing behavioral change on the floor. That’s how leaders apply AI without disruption.
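For the predictive maintenance mapping above, a minimal sketch of “flag risk earlier” might look like the following. It assumes a single hypothetical vibration signal and uses a rolling z-score as a simple stand-in for a real predictive model; the window size, threshold, and readings are made up for illustration. It returns advisory flags only and does not touch work orders or machine control.

```python
from statistics import mean, stdev

def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices where a reading deviates from the preceding window
    by more than `threshold` standard deviations (advisory only)."""
    flags = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        spread = stdev(history)
        if spread == 0:
            continue  # flat history: no basis for a z-score
        z = (readings[i] - mean(history)) / spread
        if abs(z) > threshold:
            flags.append(i)
    return flags

# Hypothetical vibration readings (mm/s) from one machine.
vibration_mm_s = [2.0, 2.1, 1.9, 2.0, 2.1, 2.0, 2.1, 3.5, 2.0, 2.1]
anomaly_indices = flag_anomalies(vibration_mm_s)
print(anomaly_indices)
```

The maintenance team still decides what to do with a flag, which keeps the existing schedule and work-order process intact.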

A Step-by-Step Roadmap to Deploy AI Without Disruption

This roadmap is designed for leaders who want results without risking uptime or credibility on the floor.

Step 1: Start With One Costly Problem

Choose a problem that already hurts output, cost, or reliability, such as downtime, scrap, or missed schedules. If the issue isn’t visible in daily reviews, AI won’t make it important. Start with a single line or process. Multi-site rollouts dilute focus and slow learning.

Step 2: Use Existing Data First

Begin with the data you already generate: machine signals, quality logs, MES events, and ERP records. Waiting for “perfect data” or new infrastructure delays value and increases resistance. Early wins come from imperfect but usable signals.

Step 3: Deploy AI as Decision Support

Start with alerts, risk scores, and recommendations. Avoid automated control initially. Let teams see how AI behaves before it influences production decisions.

Step 4: Validate With Operators

Operators confirm whether outputs make sense. Their feedback improves accuracy and builds trust. If the floor doesn’t believe it, it won’t scale.
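One hedged way to make operator validation concrete is to record each alert alongside the operator’s verdict and track the confirmation rate per alert type. The record layout and alert names below are hypothetical; the sketch assumes the verdict is captured as a simple confirm or reject during the normal review of an alert.

```python
from collections import defaultdict

def confirmation_rates(feedback):
    """Map each alert type to the share of alerts operators confirmed as valid."""
    totals = defaultdict(int)
    confirmed = defaultdict(int)
    for alert_type, operator_confirmed in feedback:
        totals[alert_type] += 1
        if operator_confirmed:
            confirmed[alert_type] += 1
    return {t: confirmed[t] / totals[t] for t in totals}

# Hypothetical feedback log: (alert type, did the operator confirm it?)
feedback_log = [
    ("bearing_wear", True),
    ("bearing_wear", True),
    ("bearing_wear", False),
    ("bearing_wear", True),
    ("surface_defect", False),
    ("surface_defect", True),
]
rates = confirmation_rates(feedback_log)
print(rates)
```

A low confirmation rate for one alert type is a signal to tune or retire that alert before scaling it to other lines.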

Step 5: Scale Gradually

Expand line by line, then site by site. Keep the same process, metrics, and ownership. Disciplined scaling prevents disruption.

Common Failure Points Leaders Should Watch For

Most AI failures in manufacturing come from execution choices, not technology limitations.

Starting with dashboards instead of decisions

When outputs are limited to dashboards, teams see information but do not act on it. If no decision changes on the floor, AI adds visibility without impact.

Expanding too fast after a pilot

Pilots succeed in controlled conditions. Scaling before ownership, workflows, and metrics are stable introduces risk across operations.

Letting vendors define success

Vendors focus on deployment and model performance. Leaders must define success using operational outcomes such as downtime, scrap, and throughput.

Ignoring ongoing model upkeep

Data and operating conditions change over time. Without regular review and adjustment, accuracy declines and trust fades.

Treating AI as an IT initiative

When AI is owned only by IT, adoption stalls. Operational leaders must own outcomes, with IT supporting integration and reliability.

These failure points build gradually. Catching them early prevents disruption and protects results.
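The model upkeep point above can be turned into a routine check. The sketch below is a deliberately simple assumption of what such a check could look like: it measures how far the recent average of one input signal has moved from the data the model was built on, in units of the original standard deviation. The temperature readings and the review threshold of 2.0 are invented for illustration; real drift monitoring usually covers more signals and more than the mean.

```python
from statistics import mean, stdev

def drift_score(training_values, recent_values):
    """Shift of the recent mean from the training mean, in training standard deviations."""
    return abs(mean(recent_values) - mean(training_values)) / stdev(training_values)

# Hypothetical spindle temperature readings (deg C).
training_temps = [60.0, 62.0, 61.0, 59.0, 63.0, 61.0]
recent_temps = [66.0, 67.0, 65.0, 66.0]

score = drift_score(training_temps, recent_temps)
needs_review = score > 2.0  # review threshold is an assumption
print(round(score, 2), needs_review)
```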

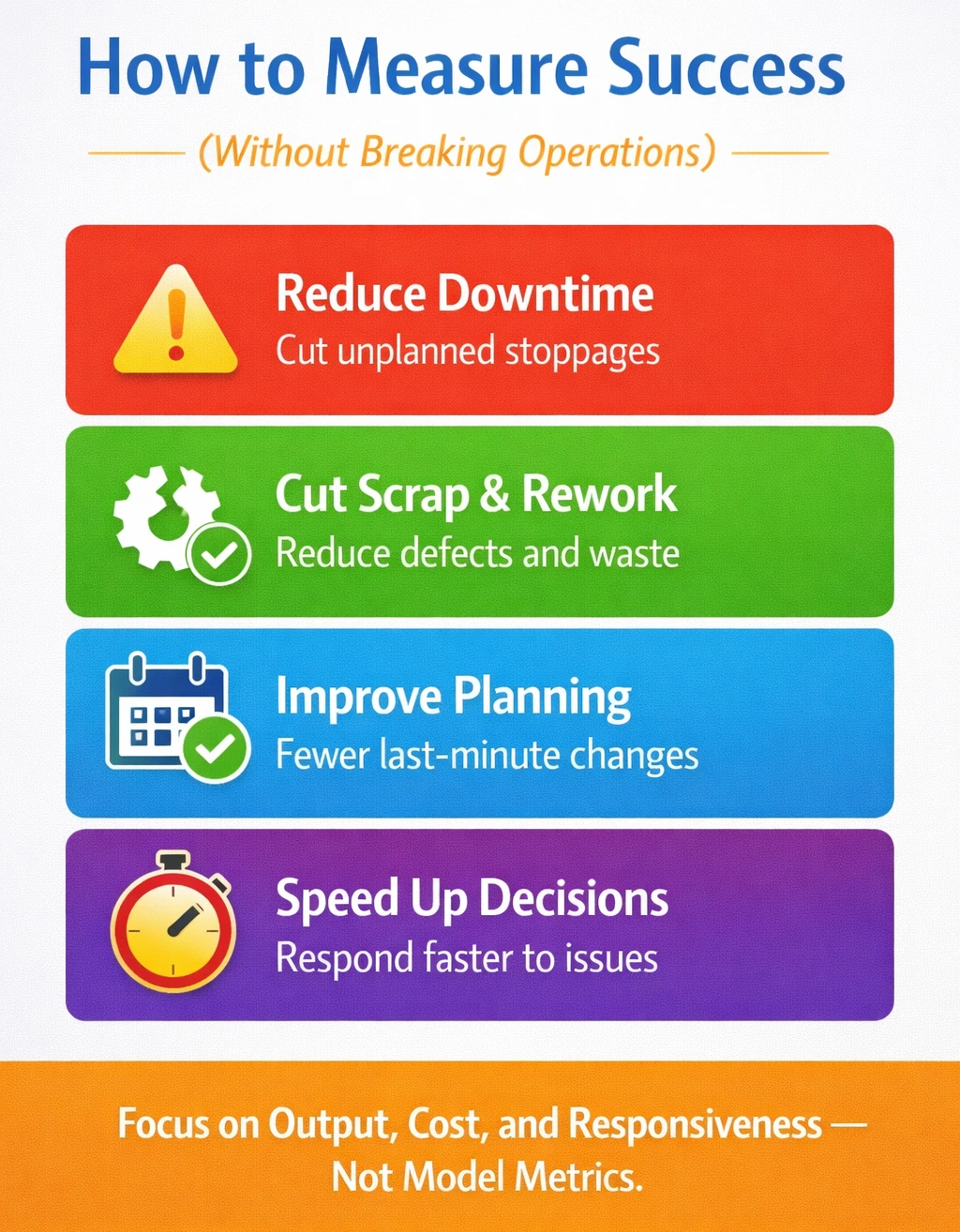

How to Measure Success (Without Breaking Operations)

If AI success is measured in model metrics, the project is already off track. Manufacturing leaders should measure AI the same way they measure operations: by impact on output, cost, and responsiveness.

Avoid vanity metrics. Model accuracy, confidence scores, or dashboards mean nothing if production outcomes don’t improve. If results aren’t visible in daily ops reviews, AI isn’t delivering ROI.
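To keep measurement in operational terms, a simple before-and-after comparison is often enough. The sketch below computes the percentage change for each operational metric; the metric names and numbers are invented for illustration and are not results from any plant.

```python
def percent_change(before, after):
    """Percentage change from before to after (negative means a reduction)."""
    return (after - before) / before * 100

# Hypothetical monthly figures for one line, before and after a pilot.
before = {"downtime_hours": 40.0, "scrap_rate_pct": 2.5, "units_per_shift": 800.0}
after = {"downtime_hours": 32.0, "scrap_rate_pct": 2.0, "units_per_shift": 840.0}

impact = {name: round(percent_change(before[name], after[name]), 1) for name in before}
print(impact)
```

These are the same figures a daily ops review already tracks, so the comparison needs no new reporting.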

Wrap Up

AI does not fail in manufacturing because the technology is weak. It fails when it is applied without discipline. Leaders who succeed start with stable processes, clear ownership, and problems tied directly to cost or output.

Effective deployment is practical. Use existing data. Introduce AI as decision support before automation. Validate outputs with operators. Scale only after results are consistent. Measure success in downtime reduction, scrap reduction, throughput, and response time, not model metrics.

AI should strengthen execution without disrupting operations. When treated as part of the operating system rather than an experiment, it delivers measurable value and sustained control.
